Chris Baraniuk, Technology reporter

The Washington Post via Getty Images

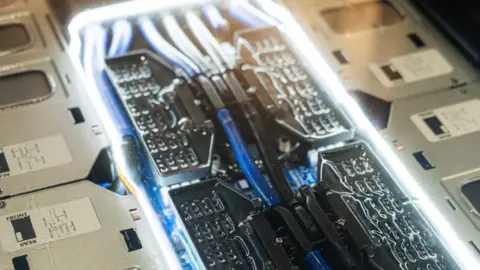

They work at high speed 24/7 and get very hot, but data center computer chips are well taken care of. Some of them basically live in spas.

“We’ll have the liquid rise and (then) pour down or drip onto the components,” said Jonathan Ballon, CEO of liquid cooling company Iceotope. “Something will get sprayed.”

In other cases, these hard-working gadgets recline in a bath of circulating liquid, which removes the heat they generate and allows them to run at very high speeds, a practice known as “overclocking.”

“Our customers overclock all the time because there is zero risk of burning out their servers,” Mr. Ballon said. He added that a hotel chain client in the United States is planning to use heat from its servers to warm guest rooms, hotel laundry rooms and swimming pools.

Without cooling, data centers collapse.

In November, a cooling system failure in a U.S. data center took financial trading technology offline at CME Group, the world’s largest exchange operator. The company has since installed extra cooling capacity to help prevent this from happening again.

Demand for data centers is currently booming, in part due to the development of artificial intelligence technology. But the huge amounts of energy and water consumed by many of these facilities mean they are increasingly controversial.

More than 200 environmental groups in the United States recently called for a moratorium on new data centers in the country. But some data center companies say they want to reduce their impact.

They have another motive. Data center computer chips are becoming more and more powerful. So much so that many in the industry say traditional cooling methods, such as air cooling, where fans constantly blow air toward the hottest components, are no longer sufficient for some operations.

Mr. Ballon knows that controversy continues to build around energy-hungry data centers. “The community is resisting these projects,” he said. “We need much less electricity and water. We don’t have any fans, so we run quietly.”

Iceotope says its liquid cooling approach, which cools multiple components in the data center (not just processing chips), can reduce cooling-related energy needs by up to 80%.

The company’s technology uses water to cool the oil-based liquid that actually comes into contact with the computer hardware. But the water remains in a closed loop, so there is no need to constantly draw more water from local supplies.

I asked Mr. Ballon whether the oil-based fluids in the company’s cooling systems are derived from fossil fuel products. He said some of them are, but he emphasized that none of them contain PFAS, also known as forever chemicals, which are harmful to human health.

Some liquid-based data center cooling technologies use refrigerants containing PFAS. Not only that, but many refrigerants produce potent greenhouse gases that have the potential to exacerbate climate change.

Two-phase cooling systems use this type of refrigerant, said Yulin Wang, a former senior technical analyst at market research firm IDTechEx. The refrigerant starts out as a liquid, but heat generated by server components causes it to evaporate into a gas. This phase change absorbs a lot of energy, meaning it is an efficient form of cooling.
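The advantage of a phase change can be illustrated with a rough calculation. The sketch below uses water purely as a familiar stand-in, because its properties are well known; the refrigerants used in two-phase systems have different values. The constants and function names are illustrative assumptions, not figures supplied by IDTechEx or any cooling vendor.

```python
# Approximate textbook properties of water, used only as an illustrative
# stand-in; real two-phase refrigerants have different values.
SPECIFIC_HEAT_KJ_PER_KG_K = 4.18            # energy to warm 1 kg of liquid by 1 K
LATENT_HEAT_VAPORIZATION_KJ_PER_KG = 2257.0  # energy to evaporate 1 kg at boiling point


def sensible_heat_kj(mass_kg: float, delta_t_k: float) -> float:
    """Heat absorbed by a liquid that warms up without changing phase."""
    return mass_kg * SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k


def latent_heat_kj(mass_kg: float) -> float:
    """Heat absorbed by a liquid that evaporates at constant temperature."""
    return mass_kg * LATENT_HEAT_VAPORIZATION_KJ_PER_KG


# Compare warming 1 kg of liquid by 10 K with evaporating the same 1 kg.
warming = sensible_heat_kj(1.0, 10.0)
evaporating = latent_heat_kj(1.0)
print(f"warming: {warming:.1f} kJ, evaporating: {evaporating:.1f} kJ")
print(f"ratio: {evaporating / warming:.0f}x")
```

With these stand-in numbers, evaporating a kilogram of liquid absorbs roughly 54 times as much heat as warming it by 10 degrees, which is why phase change is attractive for cooling.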

In some designs, data center technology is completely immersed in large amounts of PFAS-containing refrigerants. “Steam will escape from the tank,” Mr Wang added. “There may be some safety concerns.” In other cases, the refrigerant is piped directly to the hottest components (the computer chips).

Some companies that offer two-phase cooling are currently switching to PFAS-free refrigerants.

Over the years, companies have tried wildly different cooling methods, racing to find the best way to keep data center equipment running well.

Microsoft, for example, famously sank a tubular container filled with servers into the sea off the Orkney Islands. The idea was that the cold Scottish seawater would increase the efficiency of the air cooling system inside the unit.

Last year, Microsoft confirmed it had shut down the project. But Alistair Speirs, general manager of global infrastructure for Microsoft’s Azure business group, said the company learned a lot from it. “Without a (human) operator, there’s less opportunity for error, and that informs some of our operating procedures,” he said. Data centers that are more hands-off appear to be more reliable.

Preliminary findings show the subsea data center has a Power Usage Effectiveness (PUE) rating of 1.07, indicating it is significantly more efficient than the vast majority of land-based data centers. And it requires zero water.
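To put that figure in context, PUE is conventionally defined as the total energy a facility consumes divided by the energy delivered to its IT equipment, so a value of 1.0 would mean no overhead at all. The short sketch below works through the arithmetic. The function names and the 1,000 kWh IT load are hypothetical, chosen for illustration; they are not figures from Microsoft.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(pue_value: float, it_equipment_kwh: float) -> float:
    """Energy spent on everything other than IT equipment (cooling, power losses, etc.)."""
    return (pue_value - 1.0) * it_equipment_kwh


# Hypothetical IT load, for illustration only.
it_load_kwh = 1000.0

# Overhead implied by the reported PUE of 1.07.
print(round(overhead_kwh(1.07, it_load_kwh), 1))
```

At a PUE of 1.07, every 1,000 kWh of computing is accompanied by roughly 70 kWh of overhead for cooling and other non-IT loads.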

But in the end, Microsoft concluded that the economics of building and maintaining undersea data centers were not ideal.

The company is still working on liquid-based cooling concepts, including microfluidics, in which tiny channels of liquid flow through multiple layers of a silicon chip. “You can imagine a labyrinth of liquid cooling through nanoscale silicon,” Mr. Speirs said.

Researchers have proposed other ideas, too.

In July, Renkun Chen and colleagues at the University of California, San Diego, published a paper detailing their idea for a pore-filled, membrane-based cooling technology that could help passively cool chips without the need to actively pump liquid or blow air around them.

“Essentially, you’re using heat to power the pump,” Chen said. He compared it to the process by which water evaporates from leaves, creating a pumping effect that draws more water through the plant’s trunk and branches to replenish the leaves. Professor Chen said he hopes to commercialize the technology.

Sasha Luccioni, director of artificial intelligence and climate at machine learning company Hugging Face, said new ways of cooling data center technology are growing in popularity.

This is partly due to demand for artificial intelligence, including generative AI and large language models (LLMs), the systems that power chatbots. In previous research, Dr. Luccioni has demonstrated that such technologies consume large amounts of energy.

“If you have a model that’s very energy-hungry, then the cooling has to be stepped up a notch,” she said.

Reasoning models, which explain their output in multiple steps, are even more demanding, she added.

They consume “hundreds or thousands of times more energy” than a standard chatbot that simply answers questions. Dr. Luccioni called on AI companies to be more transparent about the energy consumed by their various products.

For Mr. Ballon, LLMs are just one form of artificial intelligence, and he believes they have “reached their limits” in terms of productivity.